My knowledge of hardware design is very limited, so I can only speculate, but I believe the reason Cerny went with the smaller APU comes down to thermal and power envelopes, and the desire to clock it faster. I suspect that, given the constraints of a console APU, having fewer compute units allows for a higher clock rate within the same budget. The faster clocks help tasks get done faster (duh) as long as occupancy remains high.

Speaking of occupancy and even memory, there are fundamental architectural differences between the PS5 and the Series X. The phrases "narrow and fast" and "wide and slow" apply here.

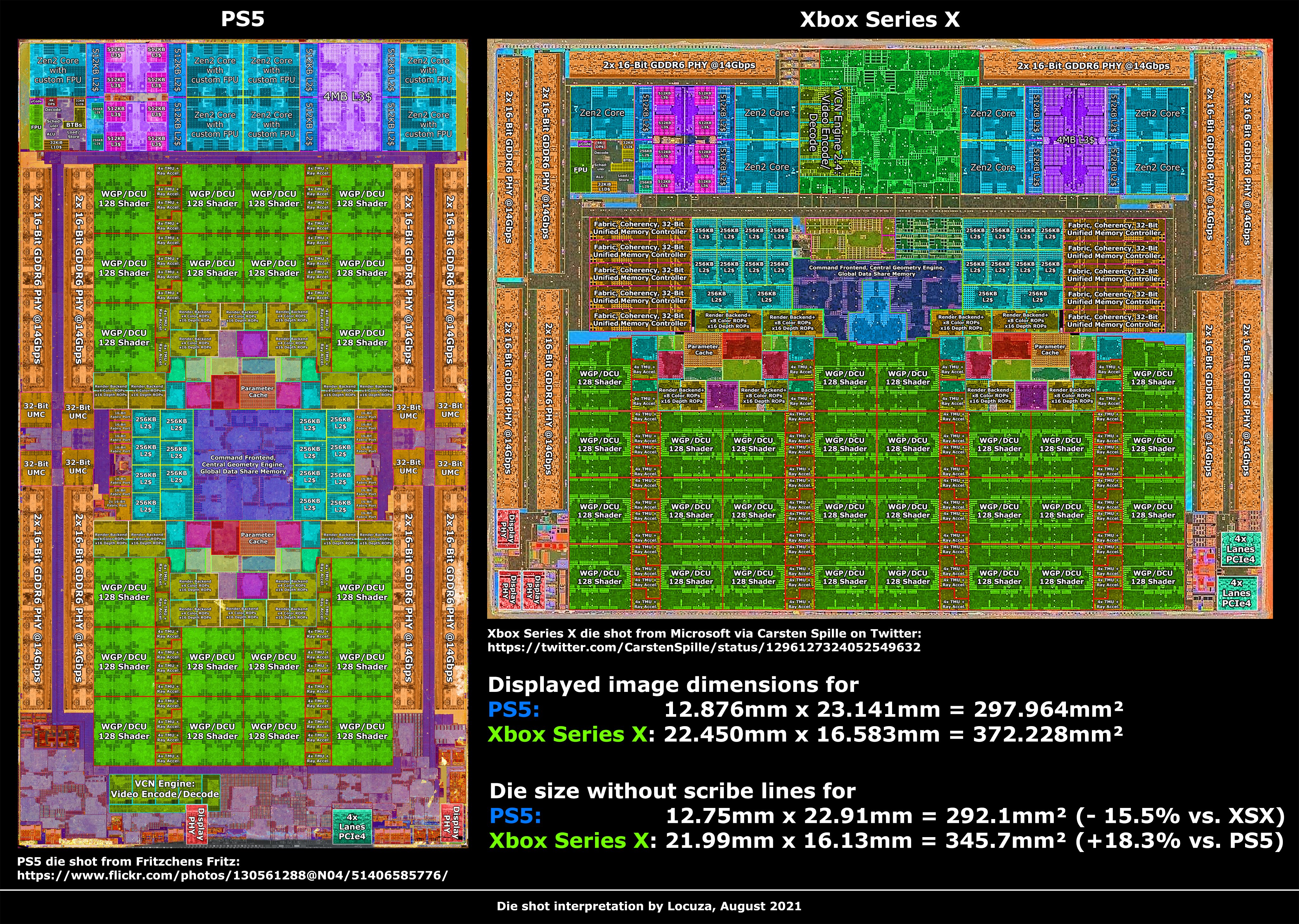

The PS5 GPU is as follows:

- 2 Shader Engines with 2 Shader Arrays each

- Each Shader Array has 10 CUs

- 4MB L2 cache, which I believe is split in half between shader engines

- 2 pairs of CUs are disabled for yields for a total of 36 active CUs

The Xbox Series X, however, is as follows:

- 2 Shader Engines with 2 Shader Arrays each

- Each Shader Array has 14 CUs

- 5MB of L2 cache with the same conditions as the PS5

- 2 pairs of CUs are disabled for yields, for a total of 52 active CUs

At first glance the Xbox seems to have the advantage. Look deeper, though, and its wide approach (more compute units per shader array), the smaller amount of L2 per CU (0.111MB/CU on the PS5 vs 0.096MB/CU on the Series X) and the slower clock may together prove to be a disadvantage.
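For anyone who wants to check the numbers, here's a quick sketch of the arithmetic. The CU and cache figures are the ones listed above; the helper name `active_cus` is just something I made up for illustration.

```python
def active_cus(engines, arrays_per_engine, cus_per_array, disabled_cus):
    """Physical CUs from the shader engine/array layout, minus the disabled ones."""
    return engines * arrays_per_engine * cus_per_array - disabled_cus

# 2 pairs of CUs disabled for yields = 4 CUs on each console
ps5_cus = active_cus(2, 2, 10, disabled_cus=4)
xsx_cus = active_cus(2, 2, 14, disabled_cus=4)

# L2 cache in MB divided across the active CUs
ps5_l2_per_cu = 4 / ps5_cus
xsx_l2_per_cu = 5 / xsx_cus

print(f"PS5:      {ps5_cus} CUs, {ps5_l2_per_cu:.3f} MB of L2 per CU")
print(f"Series X: {xsx_cus} CUs, {xsx_l2_per_cu:.3f} MB of L2 per CU")
```

This only covers capacity per CU. It says nothing about bandwidth or hit rates, which is where the real differences would show up.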

RDNA2 cards stuck with the 20 CU per Shader Engine scheme, or 10 per array, so even AMD favours the narrower design (here's the floor plan for the Navi 21):

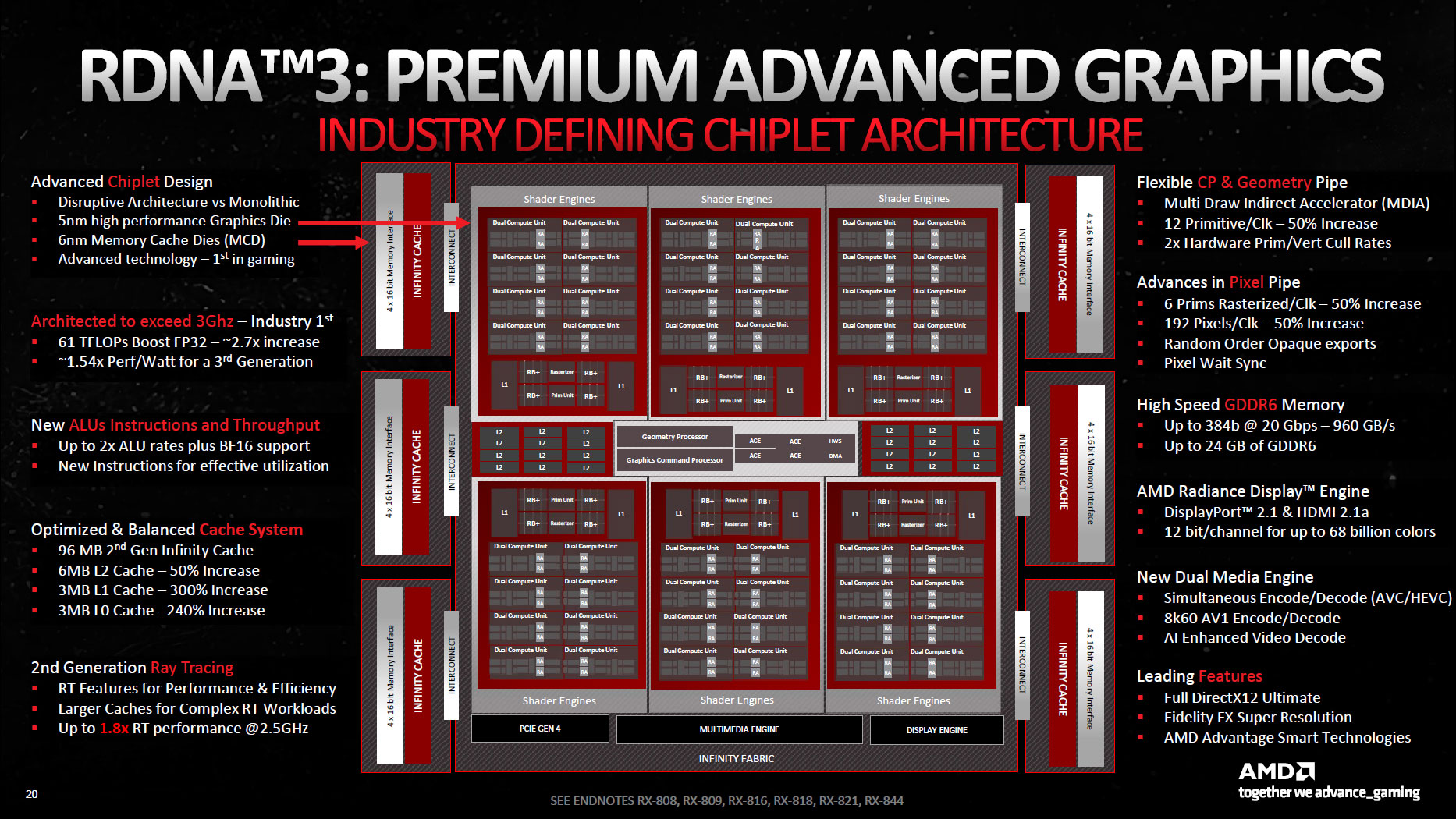

The same applies to RDNA3 cards, which keep to no more than 20 CUs per Engine / 10 per array.

The thing is, people should never have expected the PS5 to win resolution battles. I've said this plenty of times, but the raw "grunt" of the Series X should allow it to produce higher resolutions with fewer "side-effects" than the PS5. The strengths of the latter show up in efficiency: you often have games that perform better or more stably on the PS5, at a slightly lower resolution. Of course engines will prefer different things, so your results may vary, but this is the case more often than not. Additionally, looking at the architectural differences, I believe the Series X cannot claw back the frames it sometimes drops simply by lowering its resolution target, which suggests it has an occupancy issue somewhere.

Overall the PS5, from a hardware standpoint, is the better optimised console IMO. I must also add that the "variable clocks" shenanigans were very poorly explained, and people still fail to understand them. It was never about "underclocking" the machine, but rather about letting the CPU and GPU share a power budget instead of giving each a fixed one. Here's a practical example of why this is, in theory at least, better.

Console A has a fixed power envelope of 200W, with 120W dedicated to the GPU and 80W dedicated to the CPU. Console B has the same total envelope, but it can starve the CPU or GPU of power if the other needs it. The more "math" you run, the more power you need, so ideally you want as many transistors firing at once as possible (you never reach 100%, which is what your theoretical TFLOP figure assumes). So, how does this play out? When constructing a frame, systems can parallelise their math between CPU and GPU (long gone are the times when this was not possible).

Now, if your target is about 16.7ms for a smooth 60 fps, you need both to complete their tasks within that time. Let's imagine your CPU can finish its part of the frame in 6ms, but your GPU, due to lack of occupancy or power, needs 18ms. You've already missed your target, and you're wasting precious CPU cycles simply waiting for the GPU. When coding for Console A, you will either have to reduce the load on the GPU or accept slower performance. On Console B, if the issue is related to power, you can simply redirect power from your CPU (which has completed its task) to the GPU (to ensure it finishes the frame in time). This happens several times per millisecond, and can go in either direction.
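To put rough numbers on that example, here's a toy model. Big caveat: it assumes throughput scales linearly with power, which real silicon does not do, and the 20W shift and the `rebalance` helper are made up purely for illustration. It is not how either console's power management actually works; it only shows the shape of the argument.

```python
FRAME_BUDGET_MS = 1000 / 60  # about 16.7 ms per frame at 60 fps

def frame_time_ms(cpu_ms, gpu_ms):
    # CPU and GPU work in parallel, so the slower of the two sets the frame time
    return max(cpu_ms, gpu_ms)

def rebalance(cpu_ms, gpu_ms, cpu_watts, gpu_watts, shift_watts):
    # TOY ASSUMPTION: work time is inversely proportional to power.
    # Moving shift_watts from CPU to GPU slows the CPU and speeds up the GPU.
    new_cpu_ms = cpu_ms * cpu_watts / (cpu_watts - shift_watts)
    new_gpu_ms = gpu_ms * gpu_watts / (gpu_watts + shift_watts)
    return new_cpu_ms, new_gpu_ms

# Console A: fixed 80W CPU / 120W GPU split, CPU done in 6 ms, GPU needs 18 ms
console_a = frame_time_ms(6, 18)

# Console B: same 200W total, but 20W (hypothetical figure) moves to the GPU
cpu_b, gpu_b = rebalance(6, 18, cpu_watts=80, gpu_watts=120, shift_watts=20)
console_b = frame_time_ms(cpu_b, gpu_b)

for name, ms in (("Console A", console_a), ("Console B", console_b)):
    verdict = "hits" if ms <= FRAME_BUDGET_MS else "misses"
    print(f"{name}: {ms:.1f} ms, {verdict} the {FRAME_BUDGET_MS:.1f} ms budget")
```

The point is just that the CPU has slack to give away: it gets slower but still finishes well inside the budget, while the GPU gets under the line. If the GPU stall is down to occupancy rather than power, none of this helps.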

So, you not only have a console that runs at a higher clock speed, has more L2 per CU and can fill its CUs with work more easily (since there are fewer of them per shader array), but you also have a system that intelligently diverts power to where it's needed to prevent stalls (assuming your problem is power budget, of course).

Apologies for the rant.
