For those of us who aren't technically minded, what are some layman's explanations for a proportional PS5 vs. Pro comparison? E.g. "the Pro will cold-load a game faster, shaving off n seconds" or "download/install-to-play time is quicker; it'll take a game of this size through that process in this amount of time".

PS5 Pro updated rumors/leaks & technical/specs discussion |OT| PS5 Pro Enhanced Requirements Detailed.

- Thread starter Gamernyc78

One thing I'm finding curious about these leaks is that they keep stating that the card has 30 WGP, for a total of 60 CU.

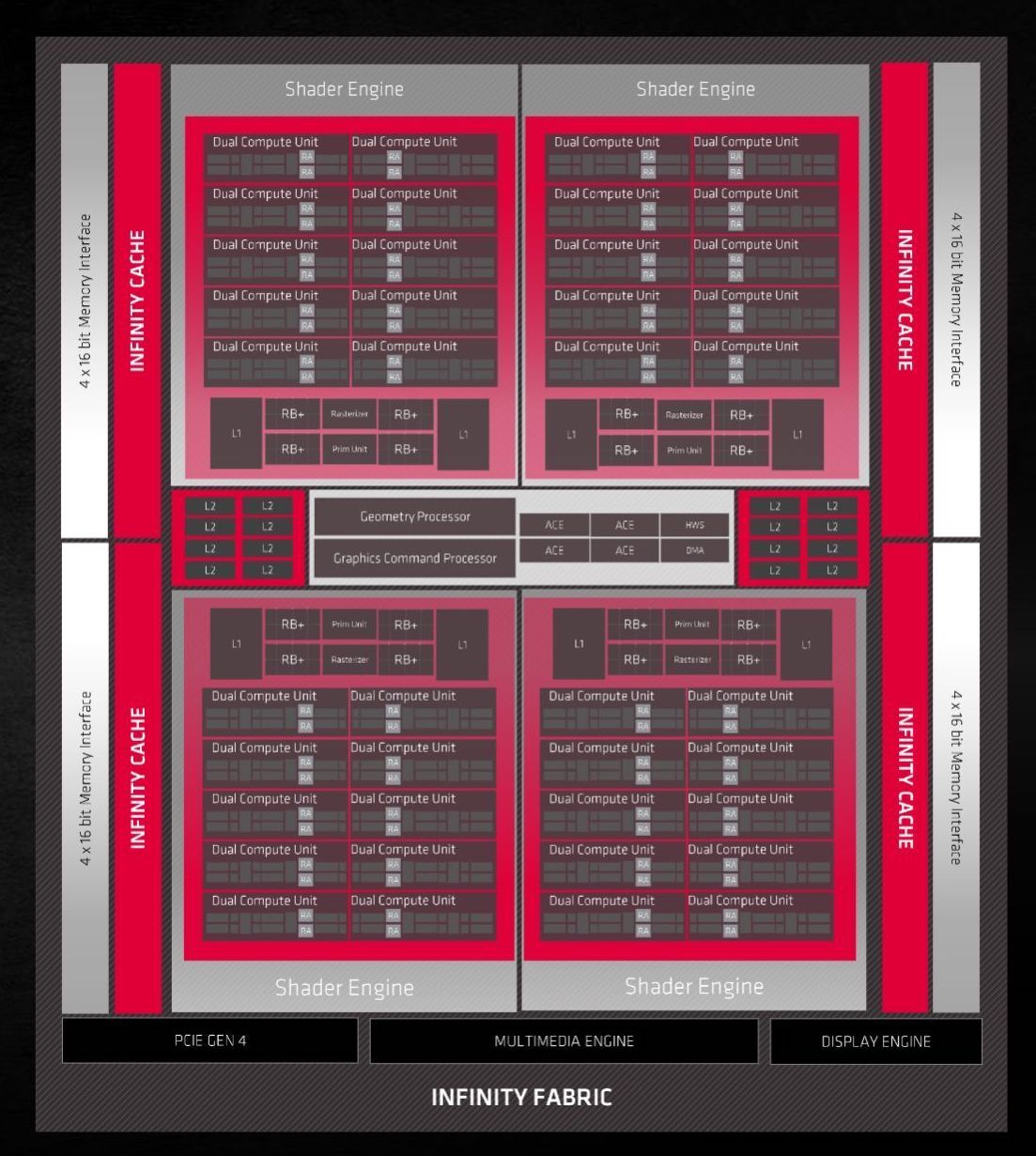

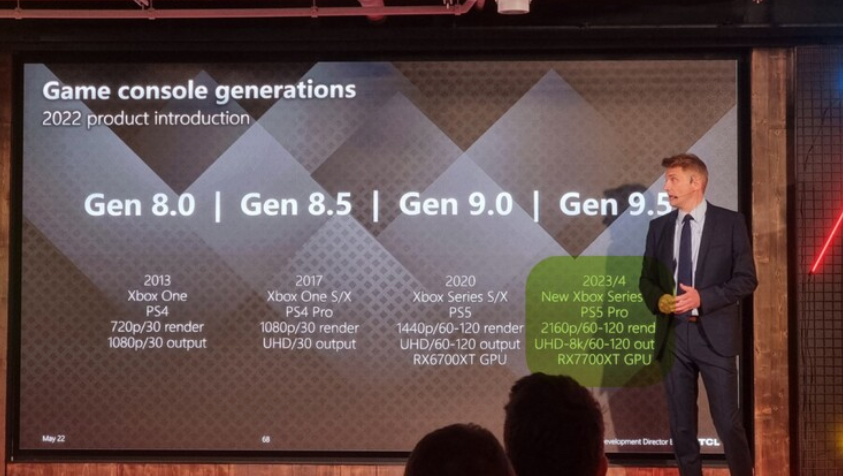

To understand why I find this curious, I want to point out something about RDNA architecture.

- Work Group Processors (WGP) each consist of two Compute Units (CU)

- You then have Shader Engines, each with a set number of WGPs

- PS5 ("Oberon"): 20 WGP / 40 CU, with 2 WGP disabled for yields

- 2 Shader Engines, each with 10 WGP

Now, looking at RDNA 3 floorplans, there is only ONE GCD they could plausibly be using if they stick to AMD's standard layouts: Navi 32.

- Navi 32: 30 WGP / 60 CU

- 3 Shader Engines, each with 10 WGP

That said, there are two possible combinations I can see here: it will have either 28 or 27 enabled WGP, for a total of 56 or 54 CU. Funnily enough, there is a card that matches the 54 CU number perfectly: the Radeon RX 7700 XT.

Now that we know the compute unit targets, what else? We don't know the clock speed, but we do know the rumored teraflop numbers with dual issue: 67 TF FP16 / 33.5 TF FP32. Without dual issue, that works out to around 16.75 TF, which is the like-for-like figure to compare against the standard PS5. That lets us calculate the actual GPU clock speed:

Compute Units x Shaders per CU x Clock speed x 2 = Teraflops (the x 2 counts each fused multiply-add as two operations)

- 54 x 64 x ? x 2 = 16.75 TF | ? = 16.75 / (54 x 64 x 2) ≈ 0.00242 THz, or 2.42 GHz

- 56 x 64 x ? x 2 = 16.75 TF | ? = 16.75 / (56 x 64 x 2) ≈ 0.00234 THz, or 2.34 GHz
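The same formula can be run in both directions in a few lines of Python. This is a minimal sketch, assuming 64 shaders per CU (as in RDNA) and taking the rumored 33.5 TF dual-issue figure halved to 16.75 TF; the function names are my own, not anything from the leaks.

```python
SHADERS_PER_CU = 64  # RDNA: 64 stream processors per compute unit
OPS_PER_FMA = 2      # a fused multiply-add counts as two floating-point ops


def tflops(cus: int, clock_ghz: float) -> float:
    """Single-issue FP32 TFLOPS: CUs x 64 x clock (GHz) x 2, converted from GFLOPS."""
    return cus * SHADERS_PER_CU * clock_ghz * OPS_PER_FMA / 1000


def clock_for(target_tflops: float, cus: int) -> float:
    """Clock in GHz needed to reach target_tflops with the given CU count."""
    return target_tflops * 1000 / (cus * SHADERS_PER_CU * OPS_PER_FMA)


if __name__ == "__main__":
    TARGET = 33.5 / 2  # rumored dual-issue 33.5 TF halved -> 16.75 TF
    for cus in (54, 56):
        print(f"{cus} CU -> {clock_for(TARGET, cus):.2f} GHz")
```

Running it prints 2.42 GHz for 54 CU and 2.34 GHz for 56 CU, matching the hand calculation.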

Funnily enough, when "Oberon" first surfaced, people were saying the PS5 was 9.2 TF because they assumed a 36 CU part based on the Navi 10 nomenclature. What's even funnier is that if you calculate teraflops assuming the PS5's "Oberon" chip has 40 CU, you get...

40 x 64 x 2 GHz x 2 = 10.24 TF, which is incredibly close to the final 10.28 TF figure Sony announced (36 CU at up to 2.23 GHz).

My personal bet is that this console has 54 enabled Compute Units running at 2.42 GHz.
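A quick check of the Oberon numbers with the same formula, assuming a 2 GHz clock for the two rumored configurations and Sony's announced 36 CU at up to 2.23 GHz for the final one:

```python
def tflops(cus: int, clock_ghz: float) -> float:
    """Single-issue FP32 TFLOPS: CUs x 64 shaders x clock (GHz) x 2 ops per FMA."""
    return cus * 64 * clock_ghz * 2 / 1000


rumored_36cu = tflops(36, 2.0)  # the 36 CU assumption people ran with: ~9.2 TF
full_40cu = tflops(40, 2.0)     # all 40 CUs on the die at 2 GHz: 10.24 TF
final_ps5 = tflops(36, 2.23)    # Sony's announced 36 CU at up to 2.23 GHz: ~10.28 TF

for label, tf in [("36 CU @ 2.0 GHz", rumored_36cu),
                  ("40 CU @ 2.0 GHz", full_40cu),
                  ("36 CU @ 2.23 GHz", final_ps5)]:
    print(f"{label}: {tf:.2f} TF")
```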

Edit: I also find it funny that the "expert" Kepler_l2 mentioned that the full config has 64 CU, organised differently from what we'd expect from RDNA 2 or 3. To put things in perspective, each shader engine usually has 10 WGP; this would require 2 shader engines with 16 WGP each. That would be a terrible engineering decision considering how caches are laid out in RDNA cards, and unless Cerny found some gold somewhere, it would be antithetical to his efficient designs.

However, he then says that 300 TOPS suggests a clock speed of 2.45 GHz (pure coincidence that this pretty much matches my math).

So which is it? I will keep calling BS on the 2-shader-engine configuration, since no RDNA 3 card uses it other than the tiny Navi 33.

Edit: If anyone asks, one of the main issues with the Series X hardware architecture is that its shader arrays are simply "too long" for the caches they have: there are efficiency losses the further a compute unit is from its L1 cache. The PS5 has 4 shader arrays with 10 CU each, while the Series X has 4 shader arrays with 14 CU each (56 physical, 52 enabled), leading to further efficiency losses. I can only begin to imagine 4 shader arrays with 16 compute units each and how dumb that would be.

I'm perfectly OK if I'm wrong, but I'm much more curious about the configuration itself than about tittyflops.

Edit again: Someone shared this image, apparently from AMD a while back, and if it's real, it gives credence to my theory of a 7700 XT-style, 54 CU machine.

What are you talking about? Reconstructed 4k is still 4k, just like heavily compressed 4k video is still 4k.

Thank you for the explanation Mr. Greenberg, I didn't realize you were still around.

Damn, can you lie just a little more and be even more dishonest? Reconstructed 4k is not the same as outputting a 1080p feed to a 4k display. Edit: When outputting 1080p content on a 4k TV, each source pixel is represented 4 times (a 2x2 block) to account for the 4x pixel count of 4k.

I'm not going to explain the various image reconstruction techniques, but the important thing is that the output, no matter what your baseline is, will always be of a higher resolution and higher fidelity thanks to reconstruction. This is not simply taking a 1080p signal and displaying it on a 4k TV.

And you know what's even better and funnier? Reconstructed 4k can look better and more detailed than heavily compressed 4k (meaning you take a raw file and compress it to save space). Fantastic, right?
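To illustrate what plain display upscaling does in the simplest case, here is a nearest-neighbour 2x upscale in Python: every source pixel is copied into a 2x2 block, so the pixel count quadruples but no new detail is created. This is only a sketch of integer scaling; many TVs use smoother interpolation filters instead, and it does not model any reconstruction technique such as PSSR or DLSS.

```python
def upscale_2x_nearest(image):
    """Nearest-neighbour 2x upscale: each source pixel becomes a 2x2 block."""
    out = []
    for row in image:
        doubled = [px for px in row for _ in range(2)]  # repeat each pixel horizontally
        out.append(doubled)
        out.append(list(doubled))  # repeat the whole row vertically
    return out


tiny = [[1, 2],
        [3, 4]]
print(upscale_2x_nearest(tiny))
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]

# 1080p -> 2160p: 4x the pixels, but every one of them is a copy
print((3840 * 2160) // (1920 * 1080))  # 4
```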

I completely realize this, but trying to pass off a heavily reconstructed image as 4K without any notation is just as intellectually dishonest.

@Satoru wasn't conflating reconstruction with full-resolution rasterization. But conflating image reconstruction with display-device upscaling is intellectually dishonest.

I did not try to conflate anything; I'm just stating a fact: reconstructed 4k is 4k, no matter how much you spin it, and you trying to equate my statement with greenburger saying "a 1080p output is the same" is dishonest to say the least.

As far as image reconstruction goes, the quality of your source matters a lot more than whether the final image is reconstructed. Here's an example from video that you can try yourself:

- Grab a RAW 4k video and compress it with a ridiculously high compression ratio: your output will be shit, even though the image will be "real 4k" by your definition, and there's no way around it

- Now grab RAW 1080p of the same video, run it through something like Topaz and reconstruct it to 4k: your output will be much clearer than the "real 4k" video, despite being "fake" (so to speak) by your definition

- Edit: Your RAW 1080p may already be clearer than the compressed 4k on its own, but my point stands.

I thought of the same tweet when I read his comment.

Did I say him/you? No, I did not. You guys can't see the forest for the trees here.

Cut the bullshit. And if you're interested:

When people are posting linked stuff or saying "I heard from someone testing at EA that Jedi Survivor is running at 4K 120Hz without a hiccup" without any notation that it's not actually 4K, it needs to be called out.

I guess "It's running at 4K with DRS and PSSR with Frame Generation" doesn't have the same ring to it...

The same people who were jerking off to DLSS 4k reconstruction are now shitting on PSSR 4k reconstruction.

You love to see it.

I guess this image as a reply to my comment was a coincidence then.

Why does it matter that it uses DRS / PSSR / DLSS, whatever? a) the presentation is still 4k and b) nobody gives two fucks as long as image quality is good and performance is stable. I'm sure you keep a magnifying glass close by to look at those pixels up close, probably between failed attempts at finding your incredibly small penis.

You failed to prove that reconstructed 4k ≠ 4k, then you tried to move the goalposts by implying that I somehow don't know what image reconstruction is, and now you're changing your tune again because a load of tools are being used to achieve the outcome.

Next thing, we'll move the goalposts to "yeah, Horizon Forbidden West may run in 4K at 30fps, but there's frustum culling, so it's not real 4k. Oh, and the fire effects are also at quarter resolution, same as reflections, so guess what, it's not real 4k either!"

So we go from "Game X is running at 4k 120fps" to "Game X is running at 4k 120fps with DRS, PSSR, Frame Generation, frustum culling, quarter-resolution reflections, SOHC VTEC, etc.".

You're just digging a bigger hole every time, please continue.

DLSS Quality (1440p to 4k) is fine.

DLSS Performance (1080p to 4k) is not.

I still prefer native with a good AA implementation, but I know DLSS saves a lot of resources... and it often wins on AA too, because most devs use crap AA (due to performance constraints).
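For a sense of how much more work Performance mode leaves to the upscaler, this sketch compares output pixels to rendered pixels, assuming the 16:9 internal resolutions named above (2560x1440 and 1920x1080) and a 3840x2160 output:

```python
OUTPUT_4K = (3840, 2160)

# Internal render resolutions from the post, assumed 16:9
MODES = {
    "DLSS Quality (1440p)": (2560, 1440),
    "DLSS Performance (1080p)": (1920, 1080),
}


def upscale_factor(internal: tuple, output: tuple = OUTPUT_4K) -> float:
    """How many output pixels each rendered pixel has to account for."""
    return (output[0] * output[1]) / (internal[0] * internal[1])


for name, res in MODES.items():
    print(f"{name}: {upscale_factor(res):.2f}x the rendered pixels")
```

Quality mode has to fill in 2.25x the rendered pixels, while Performance mode has to fill in 4x.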

You could say the same about people praising DLSS as if it's magical...

First of all, I think DF has it wrong where they mention some custom Sony block for Machine Learning.

The only thing they have in the document is "300 TOPS ML Performance". This is simply regular RDNA3 matrix processing using INT4 operands at 8x the speed of FP32: FP16 = 2x FP32, INT8 = 4x FP32, INT4 = 8x FP32.

In this case, if the PS5 Pro's GPU is running at 2450 MHz, we're getting:

60 CUs x 64 ALUs-per-CU x 2 (dual-issue ALUs) x 2 FP32 MAD OPs-per-ALU x 8 INT4_OPs-per-FP32_OP x 2450 MHz ≈ 301 TOPS.
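The TOPS arithmetic above as a small Python sketch. It uses the post's own inputs (60 CUs, dual issue, INT4 at 8x the FP32 rate) rather than any confirmed spec, and also solves for the clock that 300 TOPS would imply under those same assumptions:

```python
CUS = 60            # assumption from the leak
ALUS_PER_CU = 64
DUAL_ISSUE = 2      # RDNA 3 dual-issue ALUs
OPS_PER_MAD = 2     # a multiply-add counts as two ops
INT4_PER_FP32 = 8   # the post's claim: INT4 runs at 8x the FP32 rate


def ops_per_clock(cus: int = CUS) -> int:
    return cus * ALUS_PER_CU * DUAL_ISSUE * OPS_PER_MAD * INT4_PER_FP32


def int4_tops(clock_mhz: float, cus: int = CUS) -> float:
    # ops/clock x (clock_mhz x 1e6 clocks/s) / 1e12 ops per TOPS
    return ops_per_clock(cus) * clock_mhz / 1e6


def clock_for_tops(tops: float, cus: int = CUS) -> float:
    """Clock in MHz needed to reach the given INT4 TOPS."""
    return tops * 1e6 / ops_per_clock(cus)


print(f"{int4_tops(2450):.0f} TOPS at 2450 MHz")
print(f"{clock_for_tops(300):.0f} MHz for 300 TOPS")
```

Under these assumptions, 300 TOPS lands at about 2441 MHz, close to the ~2.45 GHz estimate.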

They also list the ML performance as a capability of the GPU itself.

Alex, as usual, loves shitting on AMD, but everything points to the ML hardware capabilities simply being what AMD already offers on RDNA3.

Then he wonders what's responsible for the ray tracing performance upgrade, when Tom Henderson's leak makes it clear that the new RT units process BVH8, i.e. at the very least they do twice the BVH intersection tests per CU.

Also, at ~51m30s in the video:

If someone asked me "Alex I have a Ryzen 5 3600 and a RTX 2080, should I buy a RTX 4070?" I would say no. I would say look at the other aspects of your CPU... (...)

Guys for the love of god, never ask Alex Battaglia about PC component upgrades.

And this isn't even a game that pushes above 8GB VRAM at 4K maxed out.

It's 33.5 TFLOPs at 2.18 GHz.

Yeah, it is 16.7 TF.
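Checking those two figures against each other, assuming all 60 CUs from the leak are active and 64 shaders per CU:

```python
CUS = 60             # assumption: all 60 CUs from the leak are active
SHADERS_PER_CU = 64
CLOCK_GHZ = 2.18

single_issue_tf = CUS * SHADERS_PER_CU * 2 * CLOCK_GHZ / 1000  # FMA = 2 ops, GFLOPS -> TF
dual_issue_tf = single_issue_tf * 2                             # RDNA 3 dual issue doubles the peak
print(f"{single_issue_tf:.1f} TF single issue, {dual_issue_tf:.1f} TF dual issue")
```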

People just need to stop using max theoretical TFLOPs as a gaming performance metric. If this wasn't obvious to everyone before we saw that the Series X doesn't run anywhere near 20% faster than the PS5, it should be now.

This mistake isn't new, though. More than 20 years ago we were all counting pixel and texel fillrate to compare console performance. And although those numbers are still very important now (probably the biggest reason the PS5 matches the Series X in real-world performance is its advantage in fillrate and triangle setup), no one looks at them nowadays.
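To put rough numbers on that, here is a sketch comparing peak TFLOPs and peak pixel fillrate. The specs are the publicly reported launch figures (PS5: 36 CU at up to 2.23 GHz, 64 ROPs; Series X: 52 CU at 1.825 GHz, 64 ROPs), treated here as assumptions; fillrate is simplified to one pixel per ROP per clock, and triangle setup isn't modelled.

```python
# Publicly reported launch specs, treated as assumptions for this sketch
CONSOLES = {
    "PS5":      {"cus": 36, "clock_ghz": 2.23,  "rops": 64},
    "Series X": {"cus": 52, "clock_ghz": 1.825, "rops": 64},
}


def tflops(c: dict) -> float:
    """Peak FP32 TFLOPS: CUs x 64 shaders x 2 ops per FMA x clock."""
    return c["cus"] * 64 * 2 * c["clock_ghz"] / 1000


def pixel_fill_gpix(c: dict) -> float:
    """Peak pixel fillrate in Gpixels/s, assuming one pixel per ROP per clock."""
    return c["rops"] * c["clock_ghz"]


ps5, xsx = CONSOLES["PS5"], CONSOLES["Series X"]
print(f"TFLOPs: Series X ahead by {tflops(xsx) / tflops(ps5) - 1:.0%}")
print(f"Pixel fillrate: PS5 ahead by {pixel_fill_gpix(ps5) / pixel_fill_gpix(xsx) - 1:.0%}")
```

On paper that is roughly an 18% compute lead for the Series X and a roughly 22% pixel fillrate lead for the PS5, since both have the same ROP count and the PS5 clocks higher.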

I don't think it will either.

There isn't enough CPU for that... the same reason UE Nanite and Lumen don't run at 60fps either.